/proc/cpuinfo and CPU topology for all 113 AWS instance types

I grew tired of having to launch an instance to check a few CPU flags. So I figured, I'll just do it once and for all.

Without further ado, you can see the results here. Click on instance type to see them. What's included:

- contents of

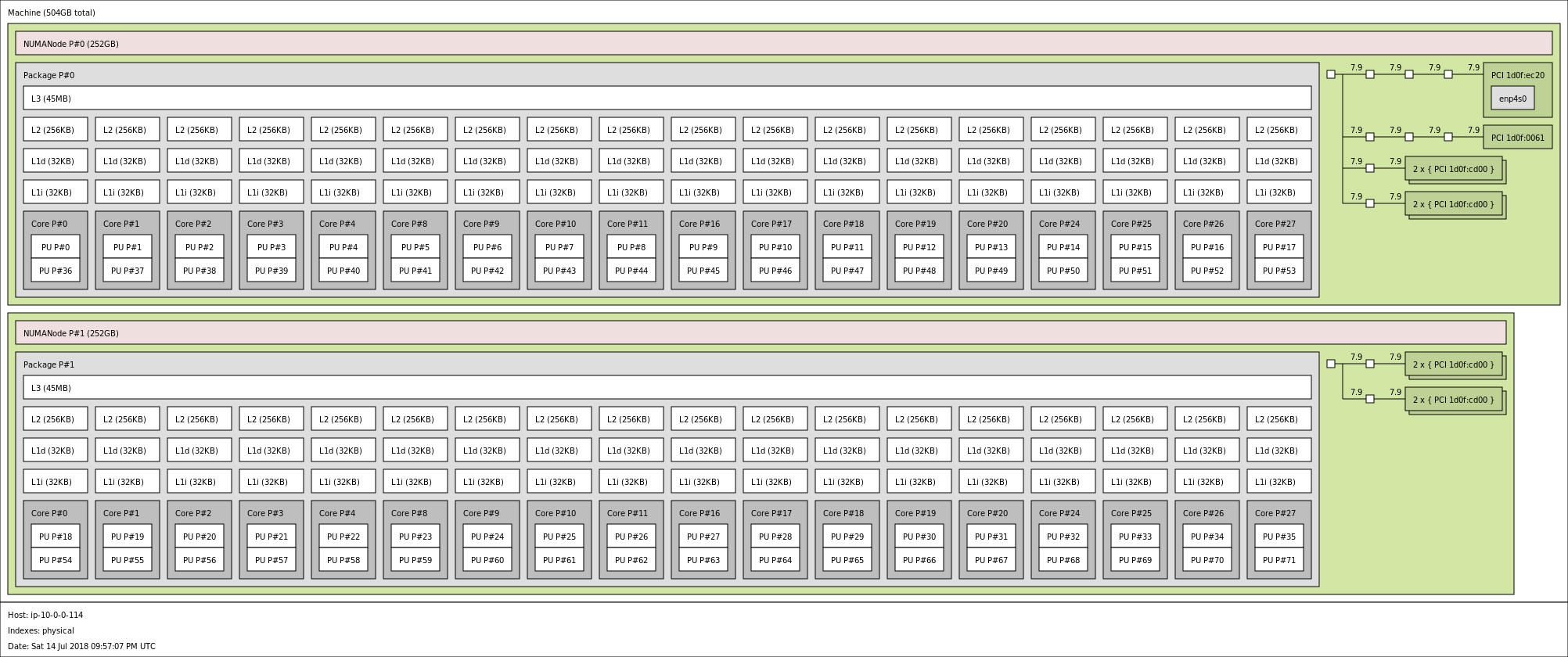

/proc/cpuinfo lstopooutput (cores, sockets, cpu caches, NUMA nodes). See the glorious i3.metal instance above.
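If you just want to check a few flags against one of the saved /proc/cpuinfo dumps, something like the following might do. This is a rough sketch, not the script I used to collect the data: the function names `parse_cpu_flags`, `has_flags` and `local_cpu_flags` are made up for illustration, and it assumes the x86 layout where the flags sit on a line starting with `flags`.

```python
def parse_cpu_flags(cpuinfo_text):
    """Return the set of CPU flags from the first 'flags' line in the text.

    Assumes the x86 /proc/cpuinfo layout ("flags : fpu vme ...").
    Returns an empty set if no such line is found.
    """
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def has_flags(cpuinfo_text, wanted):
    """Map each wanted flag name to True/False depending on its presence."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {flag: flag in flags for flag in wanted}


def local_cpu_flags(path="/proc/cpuinfo"):
    """Read the flags of the machine this runs on (Linux only)."""
    with open(path) as f:
        return parse_cpu_flags(f.read())
```

For example, `has_flags(text, ["avx2", "avx512f"])` on a saved dump tells you whether that instance type advertises AVX2 and AVX-512 Foundation, no instance launch required.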

Coming soon: nvidia-smi output for GPU instances.

A few fun things I learned doing this, in no particular order:

- AWS has a whopping 113 instance types now across 27 families (82 types if you only include “current generation”).

- However, it wasn't as painful as I thought it would be, despite the variety of instances. You're generally good if you use a recent enough AMI. The only issue I had was that i3.metal wouldn't start with one of the old deep learning AMIs. It wasn't always that simple: until recently you had to use a special AMI for p3 instances, and if you strayed from the official path, you had to make sure it supported ENA and SR-IOV, among other things.

- Spot and spot block markets are not perfectly aligned; sometimes there is capacity in one but not the other. You can save a few bucks knowing this.

- You can still launch one of the decade-old m1 instance types! However, the much newer hi1.4xlarge seems to have been silently deprecated.

- Instance limits are still a bit of a pain. If you're a small-time user on the lowest tier of support, it takes them a couple of days to respond to a limit increase request. Moreover, if you want to use spot instances, there are separate per-instance spot limits that are not visible anywhere in the console.

- The total cost was on the order of $150, though I started this project before AWS switched to per-second billing. Once they did that, it saved me quite a few bucks.